This paper is available on arxiv under CC 4.0 license.

Authors:

(1) Thomas Pethick, EPFL (LIONS) [email protected];

(2) Wanyun Xie, EPFL (LIONS) [email protected];

(3) Volkan Cevher, EPFL (LIONS) [email protected].

Table of Links

- Abstract & Introduction

- Related work

- Setup

- Inexact Krasnosel’ski˘ı-Mann iterations

- Approximating the resolvent

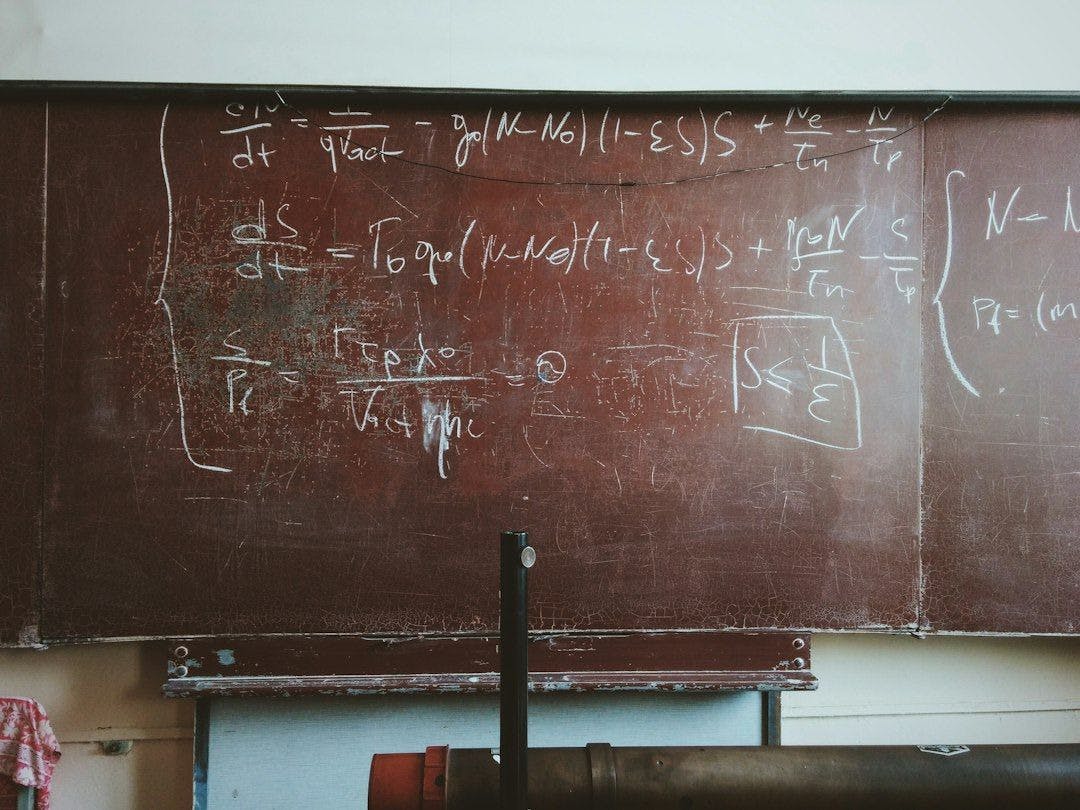

- Last iterate under cohypomonotonicity

- Analysis of Lookahea

- Experiments

- Conclusion & limitations

- Acknowledgements & References

7 Analysis of Lookahead

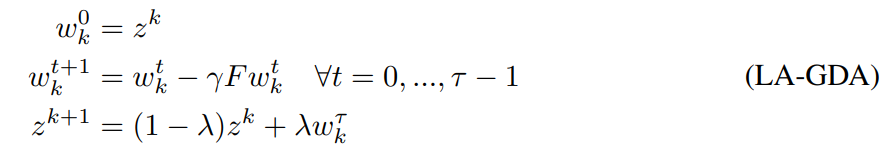

The update in RAPP leads to a fairly conservative update in the inner loop, since it corresponds to optimizing a highly regularized subproblem as noted in Section 5. Could we instead replace the optimization procedure with gradient descent ascent (GDA)? If we replace the inner optimization routine we recover what is known as the Lookahead (LA) algorithm

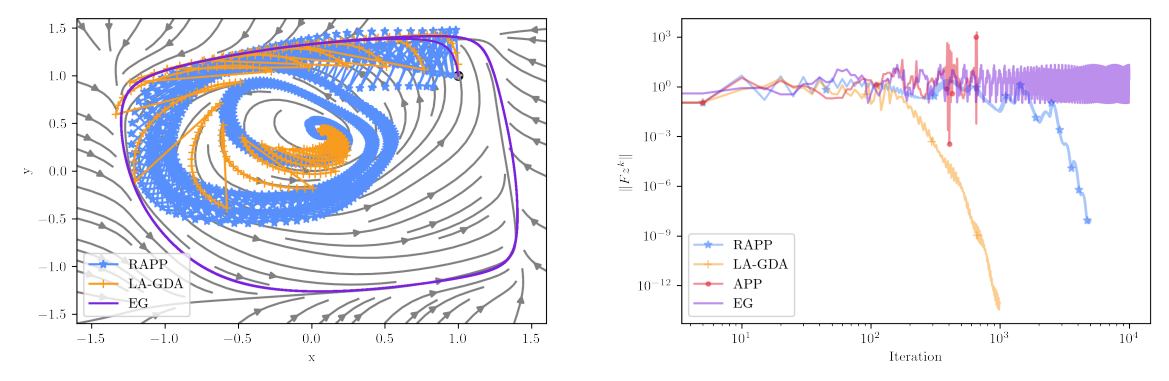

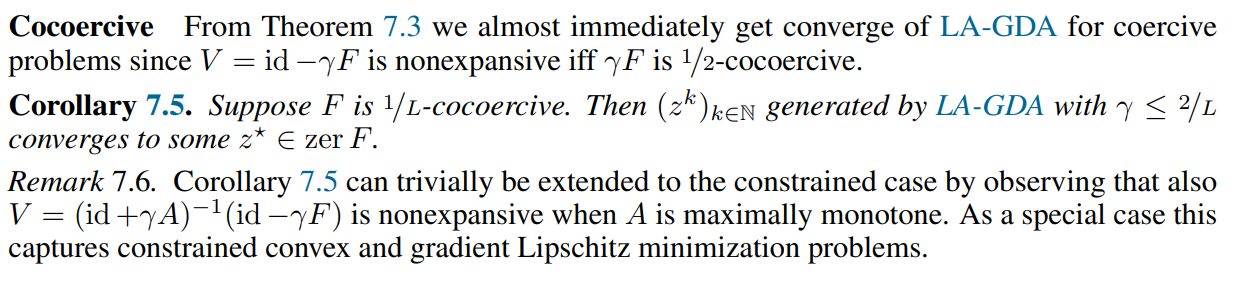

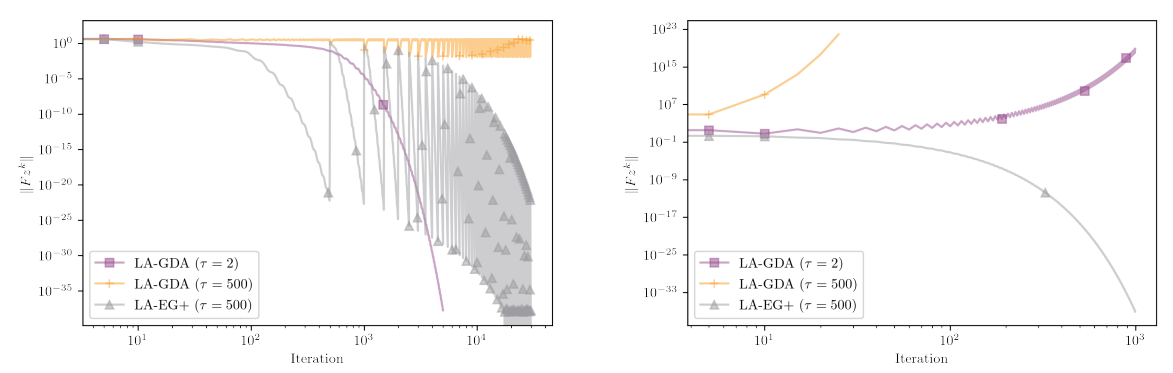

We empirically demonstrate that this scheme can converge for nonmonotone problems for certain choices of parameters (see Figure 2). However, what global guarantees can we provide theoretically?

It turns out that for LA-GDA with two inner steps (τ = 2) we have an affirmative answer. After some algebraic manipulation it is not difficult to see that the update can be simplified as follow

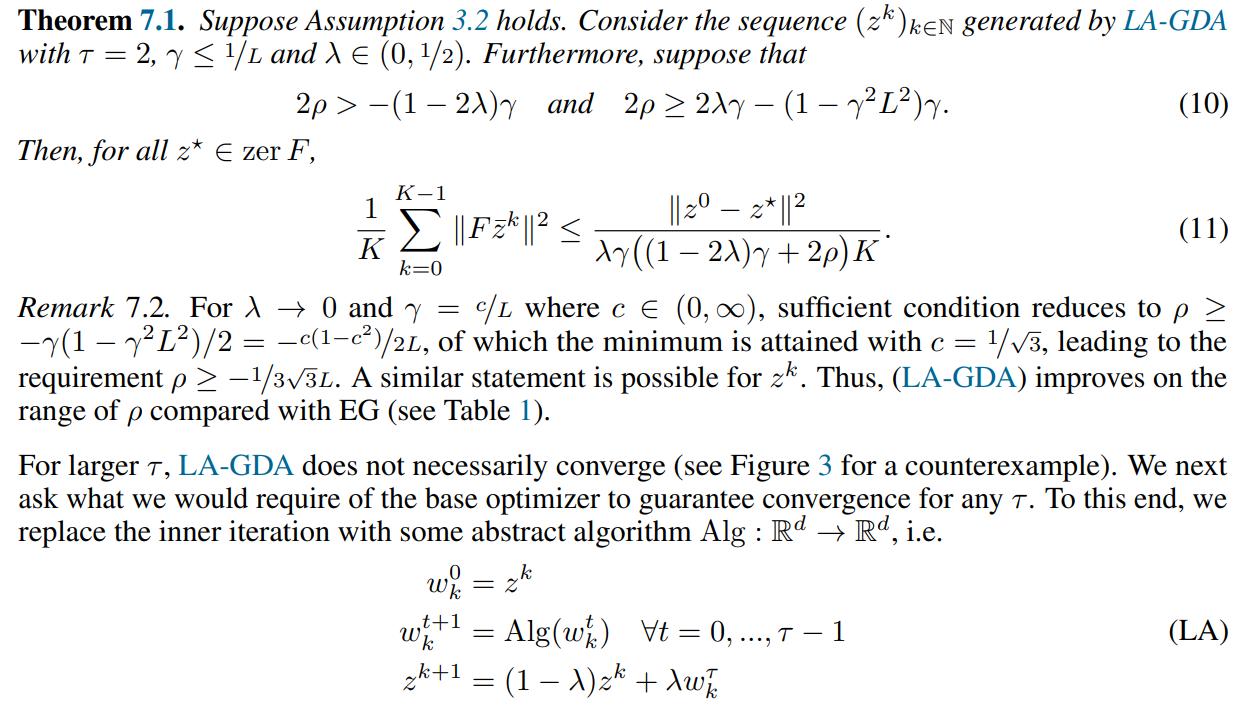

This is the average of GDA and EG+ (when λ ∈ (0, 1/2)). This observation allows us to show convergence under cohypomonotonicity. This positive result for nonmonotone problems partially explains the stabilizing effect of LA-GDA.

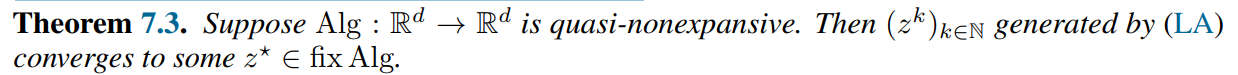

Convergence follows from quasi-nonexpansiveness.

Remark 7.4. Even though the base optimizer Alg might not converge (since nonexpansiveness is not sufficient), Theorem 7.3 shows that the outer loop converges. Interestingly, this aligns with the benefit observed in practice of using the outer iteration of Lookahead (see Figure 4).

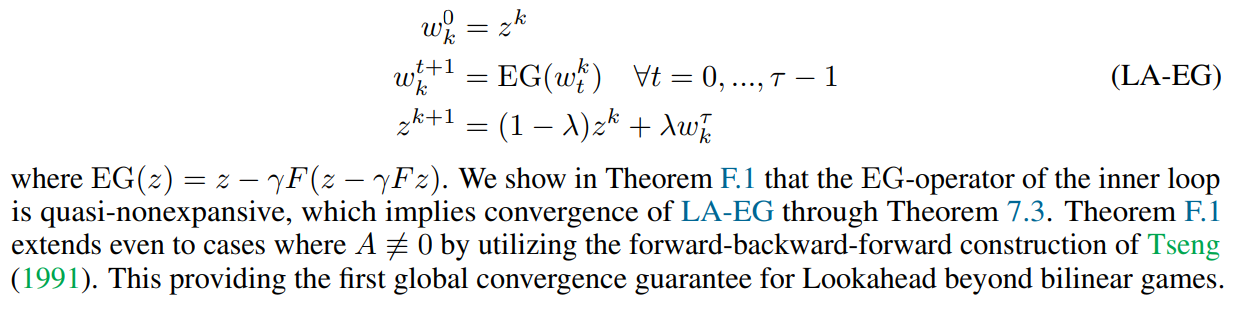

Monotone When only monotonicity and Lipschitz holds we may instead consider the following extragradient based version of Lookahead (first empirically investigated in Chavdarova et al. (2020))